Improving the success rate of deploying an integration by 88%.

Eliminated key UX blockers in integration deployment, enhancing the user experience while enabling IBM App Connect SaaS’s new pricing model.

Top 5 skills utilised

Project overview

March 2023 - February 2024

Aligning on the problem & scoping

We combined business requirements with findings from UX audits and user testing to maximise our impact from the outset. These insights fed into an alignment and ideation workshop, ensuring our proposed story addressed both business and user needs.

UX audit on IBM App Connect SaaS

When I joined the product, I conducted a full UX audit of the end-to-end experience. As part of this audit, I identified 21 usability issues in the integration deployment process alone.

I presented these findings to over 30 stakeholders across App Connect Design, Engineering & PM.

The sheer number of issues ultimately helped secure a crucial user testing budget, which we used to gather key user insights on the product.

Below are the top 3 usability issues I found:

User testing on the existing IBM App Connect SaaS experience

Before our design team was formed, IBM App Connect SaaS had already been released. Knowing that limited user testing had been done, we wanted to evaluate the existing experience with users.

I collaborated with IBM Research to define the key experiences we needed to test, including the integration deployment process. We sourced 12 participants to identify the critical experience blockers to address in this project.

In line with business goals, the users we needed to optimise this experience for were business analysts (non-technical business users), whom we refer to as “Tia”, while still catering for integration specialists (technical users), a.k.a. “Isaac”. Tias had much less success than Isaacs, highlighting that we had a long way to go to optimise this experience for new non-technical business users.

We gained many useful insights; the 3 leading blockers are provided below:

New pricing plan requirements

1. Abstracts the concept of runtimes away from the user, making them IBM-managed.

2. Usage is based on the number of times an integration is run, rather than the resources consumed.

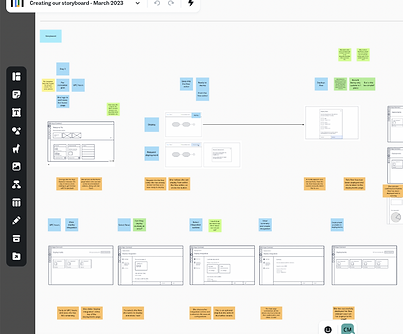

Kick-off workshop

I ran a remote, cross-functional two-day workshop with designers, engineers, and PM to quickly align, iterate, and define a high-level user story for deploying integrations under the new pricing model. Informed by research and UX audits, the story was shared across teams to drive alignment, gather feedback, and kick off detailed designs and further testing.

Ideation

This section shows how the designs evolved in response to restrictions, scoping, and further user testing. There were many more small feature enhancements, rounds of iteration, and playbacks than are shown here, but these are the most important ones to call out.

Although I led the majority of the UX design, I used this project as a hands-on mentoring opportunity for our studio’s UX apprentice, guiding them through real-world challenges while ensuring we delivered high-quality work.

Early designs

Here are some examples of the early designs and how they addressed the key experience issues identified earlier.

Restrictions & Solutions throughout the ideation phase

Restriction 1

We were told we couldn't contain deployment initiation within the authoring experience due to engineering constraints. We were also concerned that other items might take priority, so we needed evidence that this feature was necessary.

Solution

Additional user testing (9/9 success rate) confirmed that the key experience blockers were resolved. However, it also revealed that users’ mental model prioritises finding an integration before deploying it, not the other way around. This insight drove prioritisation of the feature for the second half of the year.

Restriction 2

Combining the authoring and deploy experiences wasn’t feasible due to the architecture of the Software and Container offerings, which were out of our control.

Solution

Given the scale of App Connect and the significant revenue from other offerings, re-engineering the shared codebase was not feasible. Despite the desire to enhance the SaaS offering, I recognised that pushing for this change would have jeopardised the broader business, so I refrained from pursuing it. As a result, authoring and deployment management were kept separate.

Implementation of designs

Here are the key stages of implementation for this project.

MVP (Phase 1)

These were the minimum designs required to address the critical experience blockers identified.

The engineering team was a newly formed group based in India, so we set up weekly remote check-ins for collaboration, feedback sharing, and ongoing discussion. This allowed them to provide input not only on implementation but also on the latest designs. We structured the handover process in sprints to maintain flexibility in delivery.

I was responsible for all new UX updates in this phase (our apprentice transitioned off the project before we reached UX refinement), with support from visual design to ensure adherence to design guidance.

Enhancements (Phase 2)

Once the MVP was implemented, we moved on to additional enhancements, such as introducing the ability to deploy during the authoring experience.

By this point, the design and engineering teams were working together efficiently to deliver the project.

My contributions to this stage mirrored Phase 1: I was responsible for all UX updates and decisions.

To our delight, this pricing plan became the preferred plan to demo (over the existing one) because the more seamless user experience was much easier for sales and customers to understand. This feedback validated our input.

What about the existing plan with that outdated UI full of experience issues? (Phase 3)

After delivering a vastly improved experience for the new pricing plan, which received strong praise from the wider team, we turned our focus to the outdated UI and persisting blockers in the existing plan.

We successfully pushed to prioritise this in the second half of the year. With buy-in from PM and the engineering lead, it became the top priority in the backlog, allowing us to allocate resources for additional designs and front-end development.

We needed to consider how runtimes fit into the new experience, so although we kept the experience as consistent as possible, some additional designs were required here.

This required me to deepen my technical understanding further, as runtimes are incredibly complicated, from the need for containerisation through to advanced configurations. As a result, more advanced UX design was required on my end.

By the end of this stage, engineering had fully implemented all design updates, leading to a drastically improved experience.

Project feedback

A sample of the feedback I received on my contribution to the project.

Design Lead, IBM App Connect SaaS

"You fully embrace the value of data for making decisions. You are always asking me for research and you’re 100% focussed on creating designs that solve user problems. For example, the usability study for deploy - you listened to the problems, then created designs to solve them, then you listened to the feedback on those designs and iterated again."

Architect, IBM App Connect

"Now that we are at the point of implementation, I am also really grateful for the time you are spending with the engineering team and I know that they really appreciate everything you are doing and being available for them - would be great to keep this relationship and dynamic continuing moving forwards."

Engineering Manager, IBM App Connect

"We had a very pleasant experience working with you. The regular calls and the Slack conversations were very effective to share progress, learn, clarify doubts and discuss any changes. Never felt like it was a new team we are working with. All the conversations we had were very clear and efficient. We got all the required design input well on time, sometimes even before in our handover calls, to meet development commitments."